
News (via RSS)

Amersfoort: contact with software supplier about data breach was difficult

What can I do about a drone flying over my backyard?

Solana Web3.js library backdoored to steal private keys

Lorex Wi-Fi security cameras can be taken over remotely via critical vulnerability

Court acquits suspect of doxing a public prosecutor

Heavily criticised 'data surveillance law' WGS takes effect in March

Our analysis of the 1st draft of the General-Purpose AI Code of Practice

Two weeks ago, the European AI Office published the first draft of the General Purpose AI Code of Practice. The first in a series of four drafting rounds planned until April 2025, this “high-level” draft includes not only principles, a structure of commitments (a descending hierarchy of measures, sub-measures and key performance indicators) and high-level measures, but already proposes a few detailed sub-measures, particularly in the area of transparency and copyright (Section II of the draft Code).

Following the release of the draft, the AI Office organised dedicated working group meetings with the stakeholders that take part in the Code of Practice Plenary. These stakeholders were also invited to submit written feedback on the draft through a series of closed-ended and open-ended questions. COMMUNIA took part in the Working Group 1 (WG1) meeting and submitted a written response (download as a PDF file) to the questions related to the transparency and copyright-related rules applicable to GPAI models. In this blogpost, we highlight some of our responses to the survey as well as some of the concerns expressed by other stakeholders during the WG1 meeting.

Copyright compliance policy

Article 53(1)(c) of the AI Act requires providers of GPAI models to put in place a policy to comply with Union law on copyright and related rights, and in particular to identify and comply with text and data mining rights reservations expressed pursuant to Article 4(3) of the DSM Directive. Measure 3 partially re-states this article and sub-measure 3.1. further clarifies that (a) the copyright compliance policy shall cover the entire lifecycle of the model and (b) when modifying or fine-tuning a pre-trained model, the obligations for model providers shall cover only that modification or fine-tuning, including new training data sources.

From COMMUNIA’s perspective, this measure and sub-measure are close to where they need to be. Sub-measures 3.2. and 3.3. on upstream and downstream copyright compliance require, however, a more detailed analysis.

Downstream copyright compliance

Let’s start by looking at sub-measure 3.3., which reads as follows:

Signatories will implement reasonable downstream copyright measures to mitigate the risk that a downstream system or application, into which a general-purpose AI model is integrated, generates copyright infringing output. The downstream copyright policy should take into account whether a signatory vertically integrates an own general-purpose AI model into its own AI system or whether a general-purpose AI model is provided to another entity based on contractual relations. In particular, signatories are encouraged to avoid an overfitting of their general-purpose AI model and to make the conclusion or validity of a contractual provision of a general-purpose AI model to another entity dependent upon a promise of that entity to take appropriate measures to avoid the repeated generation of output which is identical or recognisably similar to protected works. This Sub-Measure does not apply to SMEs.

At the WG1 meeting, several representatives of AI model providers and civil society organizations opposed this sub-measure, arguing that its scope goes beyond the scope of protection of Article 53(1)(c) of the AI Act. Under the AI Act, only model providers are required to put in place a copyright compliance policy; downstream systems and applications are not targeted by this provision. It was also highlighted that open source model initiatives, which typically lack a stable core group of maintainers, are not in a position to comply with long term compliance policies that include downstream obligations.

Beyond that, this sub-measure also raises user-specific concerns. Firstly, it assumes that a similar output is necessarily an infringing output, which is incorrect. A similar output can only be qualified as an infringing output if it does not qualify as an independent similar creation or if the user engages in a copyright-relevant act and no copyright exception or limitation applies. System-level measures to prevent output similarity thus entail non-negligible risks to fundamental rights.

Contrary to model-level measures, system-level measures (e.g. input filters and output filters) are triggered by an interaction with an end-user. User rights considerations come into play when output similarity is the result of an intentional act of “extraction” (e.g. specific instructions) by the end-user to cause a downstream system to generate outputs similar to copyrighted works. The user may employ selective prompting strategies to get an AI system to generate an output similar to a copyright-protected work without infringing copyright.

In fact, depending on the purpose of the use, this act of “extraction” of similar expressions in the output can be considered a legitimate use of a copyrighted work. Using an AI system to create a close copy of a copyrighted work for private consumption does not infringe upon any exclusive rights. The user would need to further reproduce, distribute, communicate or make available to the public the output for it to have copyright relevance. The use could also be covered by the quotation right, by the exception for caricature, parody and pastiche, by the incidental inclusion exception, or even by the education or research exceptions if the output is aimed at serving an education or research purpose.

Requiring model providers to pass on to “downstream” providers the obligation to prevent output similarity therefore has the potential to affect legitimate uses and cannot be proposed without proper user rights safeguards, let alone without a legal basis.

Upstream copyright compliance

Sub-measure 3.2 reads as follows:

Signatories will undertake a reasonable copyright due diligence before entering into a contract with a third party about the use of data sets for the development of a general-purpose AI model. In particular, Signatories are encouraged to request information from the third party about how the third party identified and complied with, including through state-of-the-art technologies, rights reservations expressed pursuant to Article 4(3) of Directive (EU) 2019/790.

Despite its heading (“Upstream copyright compliance”), sub-measure 3.2 does not require model providers to impose obligations on “upstream” parties. It only creates a due diligence obligation regarding data sources originating from “upstream” providers. Therefore, it does not raise the same concerns as sub-measure 3.3 regarding the creation of new copyright compliance obligations outside the scope of the AI Act.

Sub-measure 3.2. should, however, be lightly edited to take into account the specificities of open data sets. It should be clarified that the sub-measure does not apply to data sets made available under non-exclusive free licenses for the benefit of any users, unless the signatories enter into an individually negotiated contract with these “upstream” providers about the use of those data sets for the development of a GPAI model. In the absence of such a contract, model providers should remain responsible for identifying and complying with, including through state-of-the-art technologies, rights reservations expressed pursuant to Article 4(3) of Directive (EU) 2019/790.

Opting-out of AI Training

When assessing compliance with rights reservations made by right holders under Article 4(3) of the DSM Directive, GPAI model providers are expected to adopt measures to ensure that they recognise machine-readable identifiers used to opt out of AI training. However, sub-measures 4.1. and 4.3 address this issue in an unsatisfactory manner, by proposing that model providers commit to employing crawlers that respect robots.txt and make best efforts to comply with other rights reservation approaches.

At the WG1 meeting, several representatives of rights holders and civil society organizations criticised the focus on robots.txt due to the known limitations of the protocol. Indeed, robots.txt has a number of conceptual shortcomings that make it unsuitable for opting out of AI training, including the following:

- Robots.txt does not allow for opting out of TDM or specific applications of TDM (e.g. AI training). Currently, robots.txt does not allow a web publisher to indicate a horizontal opt-out that applies to all crawlers crawling for a specific type of use.

- Robots.txt policies can only be set by entities that control websites/online publishing platforms. In many cases, these entities will not be the rightsholders.

- Robots.txt has limited usefulness for content that is not predominantly distributed via the open internet, such as music or audiovisual content.
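The first shortcoming can be illustrated with a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt content, the crawler names (`ExampleAIBot`, `SomeOtherAIBot`) and the URL are hypothetical assumptions made up for this illustration. The point is only that rules are keyed to crawler names, so a publisher who blocks one named AI crawler has not opted out with respect to other crawlers collecting data for the same purpose.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the publisher blocks one named crawler
# and allows everyone else. Both crawler names are made up.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


URL = "https://example.org/articles/1"  # hypothetical page

# The named crawler is blocked ...
blocked_bot = is_allowed(ROBOTS_TXT, "ExampleAIBot", URL)
# ... but a crawler with a different name, even one crawling for the
# same purpose, falls under the wildcard rule and is allowed.
unknown_bot = is_allowed(ROBOTS_TXT, "SomeOtherAIBot", URL)

print(blocked_bot, unknown_bot)
```

Nothing in the file expresses the purpose of the crawl, so the publisher would have to know and list every relevant crawler by name.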

Any sub-measure aimed at ensuring compliance with the limits of the TDM exception must require a respect for multiple forms of machine readable rights reservations. It is insufficient to target only one specific implementation of the concept of machine readable rights reservations. Ideally, the sub-measure should also require GPAI model providers to ensure that the data ingestion mechanisms employed are able to identify if the opt-out refers to all general purpose TDM activities or only certain mining activities (e.g. for purposes of training generative AI models).
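As a rough sketch of what this could mean for a data ingestion pipeline, the snippet below models rights reservations collected from several sources and distinguishes a reservation against all TDM from one limited to generative AI training. All names here (`Scope`, `Purpose`, `Reservation`, `is_use_permitted`, and the source labels) are hypothetical and only illustrate the distinction. They do not correspond to any existing standard or to the draft Code.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Iterable


class Scope(Enum):
    """What a rights reservation covers (hypothetical categories)."""
    ALL_TDM = "all_tdm"
    GENAI_TRAINING = "genai_training"


class Purpose(Enum):
    """What the provider wants to do with the work."""
    GENERAL_TDM = "general_tdm"
    GENAI_TRAINING = "genai_training"


@dataclass(frozen=True)
class Reservation:
    source: str  # where the signal was found, e.g. "robots.txt" (illustrative)
    scope: Scope


def is_use_permitted(purpose: Purpose, reservations: Iterable[Reservation]) -> bool:
    """Return False if any reservation, from any source, covers the purpose."""
    for reservation in reservations:
        if reservation.scope is Scope.ALL_TDM:
            return False
        if reservation.scope is Scope.GENAI_TRAINING and purpose is Purpose.GENAI_TRAINING:
            return False
    return True


# A work whose embedded metadata reserves generative AI training only.
signals = [Reservation(source="file metadata", scope=Scope.GENAI_TRAINING)]
print(is_use_permitted(Purpose.GENERAL_TDM, signals))
print(is_use_permitted(Purpose.GENAI_TRAINING, signals))
```

The check only answers whether a recorded reservation blocks the use. It says nothing about whether the use is otherwise lawful.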

Opt-out effect

It is clear that the effect of an opt-out is an element that needs to be taken into consideration by AI model providers in their internal copyright compliance policy. Yet, the first draft does not deal with this issue.

We argue that an opt-out from TDM or AI training shall require the AI model provider to remove the opted-out work (including all instances of the opted-out work) from, or not include it in, its training data sets. This means that the AI model provider should be required to stop using the opted-out work to train new AI models. However, it shall not require the AI model that has already been trained to “unlearn” the work. In other words, AI model providers shall not be required to commit to remove opted-out works from already trained models, since that is not technically feasible. Instead, they should be encouraged to record and publicly communicate the training dates, after which new opt-outs will no longer have to be complied with.
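The rule we propose can be sketched in a few lines. This is a minimal illustration under stated assumptions: the `Work` record, the `training_set_for` function and the dates are hypothetical, and a real pipeline would also need to match all instances of an opted-out work. An opt-out recorded on or before a model's published training date keeps the work out of that model's training data. A later opt-out leaves that model untouched but applies to every subsequent training run.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass(frozen=True)
class Work:
    work_id: str
    opted_out_on: Optional[date]  # None means no opt-out has been recorded


def training_set_for(works: List[Work], training_date: date) -> List[Work]:
    """Keep works with no opt-out, or an opt-out made after training_date."""
    return [
        w for w in works
        if w.opted_out_on is None or w.opted_out_on > training_date
    ]


corpus = [
    Work("a", None),
    Work("b", date(2024, 6, 1)),
    Work("c", date(2025, 2, 1)),
]

# Hypothetical training dates for two successive models.
first_model = training_set_for(corpus, date(2024, 12, 31))
second_model = training_set_for(corpus, date(2025, 6, 30))

print([w.work_id for w in first_model])
print([w.work_id for w in second_model])
```

Work "c" was opted out after the first training date, so it stays in the first model's data but is excluded from the second.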

Other sub-measures

The first draft includes some measures and sub-measures on transparency, but it currently lacks a restatement of the public transparency obligation arising from Article 53(1)(d) of the AI Act and a connection to the training data transparency template to be provided by the AI Office. In the absence of the training data transparency template, the current transparency measures cannot be assessed.

Finally, the first draft includes a sub-measure that encourages AI model providers to exclude from their crawling activities websites listed in the Commission Counterfeit and Piracy Watch List (the “Watch List”). However, the Watch List is a selection of physical marketplaces and online service providers reported by stakeholders. The Commission does not take any position on the content of the stakeholders’ allegations and the list does not contain findings of legal violations. The Watch List is just a Commission Staff Working Document – it is not an official statement of the College and does not have any legal effect. Therefore, the reference to it in the Code of Practice would not be adequate.

The post Our analysis of the 1st draft of the General-Purpose AI Code of Practice appeared first on COMMUNIA Association.

Multimillion-euro subsidy for programming in primary and secondary education

US reports active exploitation of path traversal vulnerability in Zyxel firewalls

FBI gives tips against 'AI fraud': agree on a secret word with family

Police database Camera in Beeld counts nearly 339,000 cameras at 74,000 locations

Vodka producer Stoli files for bankruptcy in the US, partly because of ransomware

DPG Media, Temu, minister and cabinet nominated for Big Brother Award

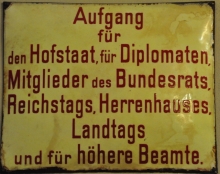

Advent No. 4: Kreuzgang (cloister) Feuchtwangen

See also

https://www.kreuzgangspiele.de/

Commons

Fictitious genealogy

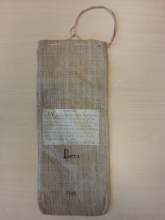

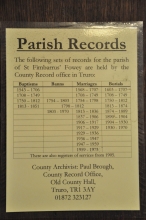

https://arcinsys.hessen.de/arcinsys/showArchivalDescriptionDetails.action?archivalDescriptionId=3313484 (with digitised copy)

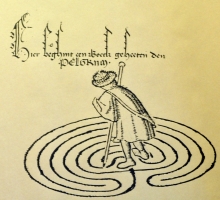

According to the finding aid, Staatsarchiv Darmstadt A 12, 347 is a “literary curiosity of unknown origin”: “Fictitious family tree for a ‘Sardanapala’ etc., for a ‘Hans Latz’ and his legendary descent, told in narrative form and furnished with fantasy coats of arms”. Judging by the script: 16th century.

Among star writers

The Kommission für geschichtliche Landeskunde in Baden-Württemberg has begun making its publications available in open access

Very good news!

“To begin with, around 30 volumes of Series B (monographs) are being digitised, catalogued and put online by the Württembergische Landesbibliothek and the Badische Landesbibliothek. The project started with the more recent publications in this series; the older publications and the source volumes of Series A will follow shortly.

These digitised copies can be found at the Württembergische Landesbibliothek and the Badische Landesbibliothek.”

I like Stuttgart better:

https://books.wlb-stuttgart.de/omp/index.php/regiopen/catalog/series/vkfgl_b

Examples:

Kreutzer: Reichenau

Gutmann: Schwabenkriegschronik

Konzen: Hans von Rechberg

Widder: Kanzleien

Eckhart: Widmer

Bühler: Ortenauer Niederadel

Using a VPN for free

Before I bought a cheap paid VPN subscription, I had good experiences with Proton when it came to using a US proxy to access books on Google Books and HathiTrust that are blocked in this country. Even with paid proxies, Chrome does not display the pages of a Google Books volume in full. But then you simply download the book as a PDF and upload it to the Internet Archive …

Free web proxies, which for Google Books are an alternative to VPN services that require registration, are of no use with HathiTrust. I just searched Google for “US Proxy” and tried https://www.4everproxy.com/de/proxy. After clicking on “Voransicht” (preview) at the address https://books.google.de/books?id=5dFJAAAAMAAJ, a link to the PDF appears. (The book is, of course, already in the Internet Archive.)

“Nordelbingen. Beiträge zur Geschichte der Kunst und Kultur, Literatur und Musik in Schleswig-Holstein” open access from 2023 onwards

https://macau.uni-kiel.de/receive/macau_mods_00003654

In the 2024 volume, the inevitable Enno Bünz reports on the cleric Martin Scherer and the endowment of the Way of the Cross (Kreuzweg) in Heide.

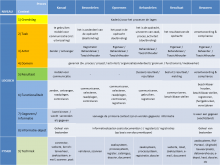

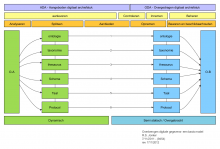


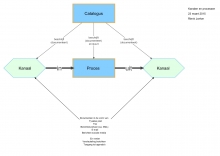

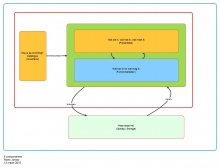

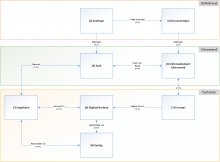

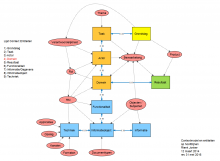

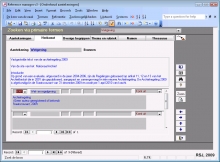

![Reference manager - [Onderhoud aantekeningen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20aantekeningen%5d%2014-2-2010%20102617_0258a.jpg?itok=OJkkWhxY)

![Reference manager - [Onderhoud aantekeningen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20aantekeningen%5d%2014-2-2010%20102628a0e3.jpg?itok=CUvhRRr7)

![Reference manager - [Onderhoud bronnen] - Opnemen en onderhouden](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20bronnen%5d%2014-2-2010%20102418f901.jpg?itok=d7rnOhhK)

![Reference manager - [Onderhoud bronnen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20bronnen%5d%2014-2-2010%201024333c6b.jpg?itok=CgS8R6cS)

![Reference manager - [Onderhoud bronnen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20bronnen%5d%2014-2-2010%20102445_0a5d1.jpg?itok=4oJ07yFZ)

![Reference manager - [Onderhoud bronnen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20bronnen%5d%2014-2-2010%201025009c28.jpg?itok=ExHJRjAO)

![Reference manager - [Onderhoud bronnen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20bronnen%5d%2014-2-2010%201025249f72.jpg?itok=IeHaYl_M)

![Reference manager - [Onderhoud bronnen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20bronnen%5d%2014-2-2010%20102534b3cc.jpg?itok=cdKP4u3I)

![Reference manager - [Onderhoud thema's en rubrieken]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20themas%20en%20rubrieken%5d%2020-9-2009%20185626d05e.jpg?itok=zM5uJ2Sf)

![Reference manager - [Raadplegen aantekeningen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bRaadplegen%20aantekeningen%5d%2020-9-2009%201856122771.jpg?itok=RnX2qguF)

![Reference manager - [Relatie termen (thesaurus)]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bRelatie%20termen%20(thesaurus)%5d%2014-2-2010%201027517b7a.jpg?itok=SmxubGMD)

![Reference manager - [Thesaurus raadplegen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bThesaurus%20raadplegen%5d%2014-2-2010%2010273282f6.jpg?itok=FWvNcckL)

![Reference manager - [Zoek thema en rubrieken]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bZoek%20thema%20en%20rubrieken%5d%2020-9-2009%2018554680cd.jpg?itok=6sUOZbvL)

![Reference manager - [Onderhoud rubrieken]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%2020-9-2009%201856340132.jpg?itok=oZ8RFfVI)

![Reference manager - [Onderhoud aantekeningen]](../../sites/default/files/styles/medium/public/Reference%20manager%20v3%20-%20%5bOnderhoud%20aantekeningen%5d%2014-2-2010%201026032185.jpg?itok=XMMJmuWz)

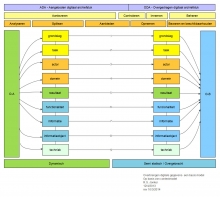
